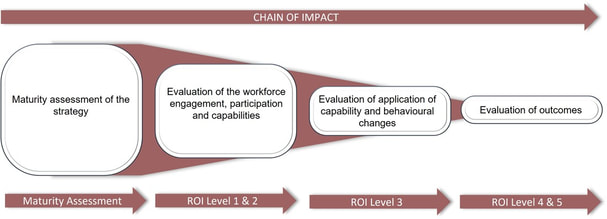

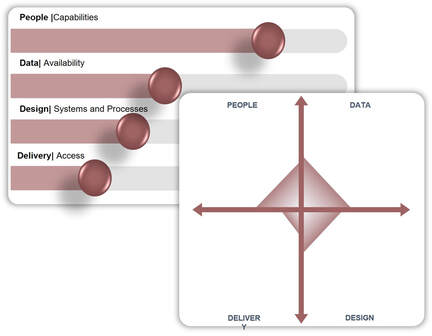

Evaluation is a critical part of any workforce capability strategy, program or training course. It is used not only to measure the effectiveness and impact that training has on a workforce but also to influence and improve the design and delivery of the program itself. Many in the learning and development world want to use evaluation to evidence impact on broad workforce metrics like employee retention, leadership satisfaction or diversity representation. But the truth is that no single program, or even a collection of programs, can credibly claim to have influenced these outcomes based on the delivery of the programs alone, hence the need to evaluate.

Chain of impact

I've seen many organisations leap to measuring overall workforce outcomes as a way of evaluating their training programs and get disheartened when the dial doesn't shift. What is always missing is a credible chain of impact. Donald Kirkpatrick was onto something all those years ago when he introduced the Kirkpatrick model for evaluation. Jack Phillips took it to the next level (pun intended) with the Phillips ROI methodology, which I was proud to get certified in over a decade ago. My evaluation framework is unapologetically built on these foundations but with my own added flair.

Maturity Assessment

My framework includes an assessment of maturity within the strategy or programs themselves, looking at four components:

An assessment of maturity in these components measures the strength of the foundations that the strategy or programs are built on and provides a pathway for growth. See my post on maturity here.

Performance and Perception

Most evaluations of learning programs are based on participant feedback alone, often captured as an after-event survey. Perception measures are of course critical to an evaluation: they measure experience and therefore the effectiveness of a program. But alone they are a little one-sided. Including performance metrics adds credibility to an evaluation and creates a more tangible link to organisation and workforce outcomes. Performance metrics captured at all the ROI levels, and measured in conjunction with the perception measures, provide a well-rounded, holistic evaluation of the impact of a program.

A full ROI evaluation isn't right or necessary for every training program. They can be time-consuming and costly to implement, so organisations continue with their status quo of feedback forms and never truly know what impact their programs are having. And whilst a full ROI may not be necessary, there are a few steps a Learning and Development team can take to improve the credibility of their evaluations without breaking the bank or adding to their workload.

Step 1: Include level 1 performance data

Level 1 is all about reaction (which is a perception measure) and participation. Every organisation will in some way capture participation in training programs, either through a learning management system (LMS) or some bespoke register. Use this as a performance measure, but don't just report the volume of participants or even the percentage of completions. Measure participation as a percentage of the program's target population. For example, if a program is targeted towards female leaders, measure the number of actual participants as a percentage of the total female leadership workforce.
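As a rough illustration of that calculation, here is a minimal Python sketch. The function name `reach` and the figures (12 participants out of 240 female leaders) are hypothetical, chosen only to show the arithmetic; in practice the counts would come from your LMS or register and your workforce data.

```python
def reach(participants: int, target_population: int) -> float:
    """Return participants as a percentage of the program's target population."""
    if target_population <= 0:
        raise ValueError("target_population must be a positive number")
    return 100 * participants / target_population


# Hypothetical figures: 12 participants from a target population of 240 female leaders.
print(f"Reach: {reach(12, 240):.1f}%")  # prints "Reach: 5.0%"
```

Note that the denominator is the whole target population, not the number of people who enrolled, which is what separates this measure from a completion rate.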
This percentage is a measure of reach and shows how effective the program has been at engaging the target workforce. 100% of participants may have reacted well to the program, but if those participants only represent 5% of the target population, can the program really be expected to shift the dial at level 4?

Step 2: Incorporate data collection in the delivery of the program

So many training programs still wait until the end of delivery, or even after it, to capture participant feedback. It doesn't have to be this way! This method is guaranteed to yield low response rates, which reduces the credibility of your evaluation. Incorporating data collection in parallel with training delivery is a really effective way of capturing participant reaction and learning (ROI levels 1 & 2) while the program is being delivered. Personally, I am finding MS Forms (not intended to be a plug) a really effective way to capture data during workshops or training sessions. Build forms that include survey questions and measure before and after levels of capability. They take 2 minutes to complete and can be timed with the delivery of learning outcomes. More importantly, you can see the analysis in real time and make adjustments to your delivery on the go!

Step 3: Set an action plan and FOLLOW UP!!

I accept this requires capacity. But if you ever want to achieve level 3 evaluation, it really is down to you to set the expectation. Many training courses end with the "and tell us how you are going to apply these skills" bit. But how often is this followed up? Formalise this component of training into an actual performance/action plan where a participant sets out a goal to apply their new capabilities within a 6-month window. Follow up and ask whether or not the goal was met, what the effects were and whether there were any barriers.
You can then turn this into a performance metric: the percentage of participants who successfully applied their new capabilities in their day-to-day operations. Not only will this add credibility to your evaluation, it will also help you improve the content of the training itself.

Bonus step: Contact RMEASURES

I am certified in ROI evaluation methodologies, with 20 years' experience in HR strategy and program evaluation. I can help you analyse and evaluate the data you have already collected, combine it with performance metrics and recommend steps to improve your evaluation approach.

1 Comment

I find it interesting that you talked about program evaluations that rely on feedback from participants, which is usually collected after the event. I can imagine how important that feedback is for improving your future events if you are going to keep providing those kinds of services to different institutions or organizations. And I think that those who want to have a good reputation and leave a lasting impression should rely on that type of feedback to strengthen what they can do and eliminate the weaknesses they have.


RMEASURES

Owner: Richard McMorn

Entity Type: Sole Trader

ABN: 28 825 984 791
