
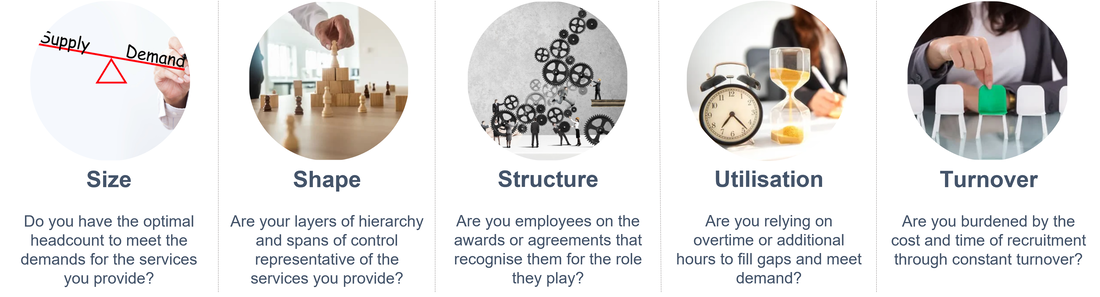

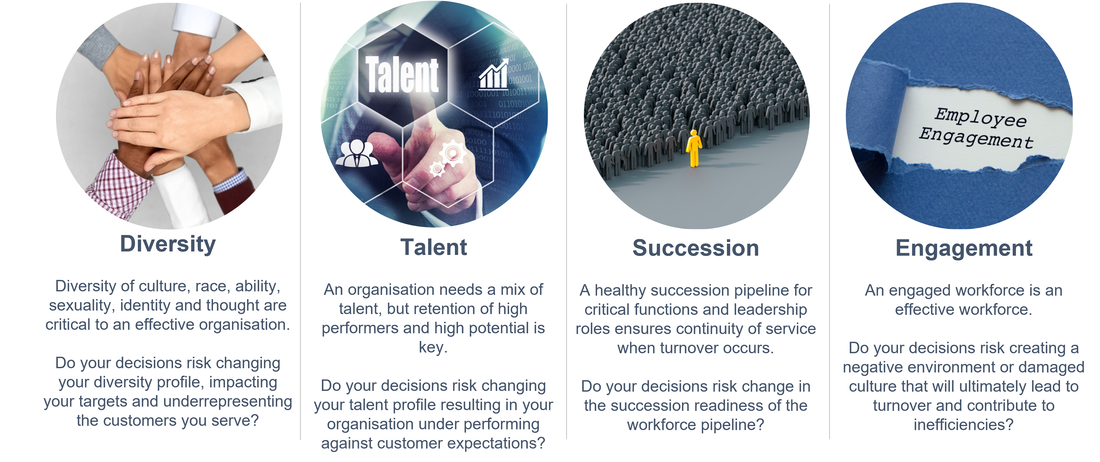

With the recent passing of the Secure Jobs, Better Pay bill, companies and union groups will be gearing up for conversations on pay and awards. These conversations come with the inevitable topic of “efficiency”, as an organisation tries to balance the cost of wages against budgets. And that's fair! There are always improvements that can be made in the way an organisation operates to realise savings that can be redirected to fair wages.

Are those around the negotiating table well enough informed to make decisions on efficiencies without detrimentally impacting the organisation's capacity to deliver results? What metrics are being used?

Efficiencies can be found in the above measures where parts of the workforce fall outside a “desirable cost threshold”. For example:

Decisions based on those measures alone can lead to recruitment freezes, restructures, reassignments or even redundancies, all in the quest to balance cost. These actions can be brutal and unintentionally short-sighted, and can have a significant impact on the efficacy of your organisation.

The value of the workforce goes far beyond its cost

"efficacy" (noun): the capacity for producing a desired result or effect.

In this context the capacity refers to your workforce. They are, after all, your greatest asset. Any decision that affects them will impact your effectiveness as an organisation, so it is vital that efficacy measures are also on the negotiation table.

Analytics are key to having informed conversations when it comes to secure jobs and better pay. No negotiation is complete without weighing up all the facts and risks: the efficiencies against the efficacy.

So tell me truthfully … are you ready for this conversation?


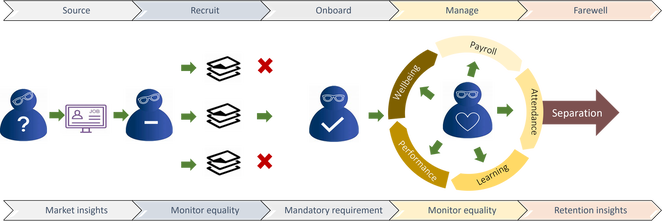

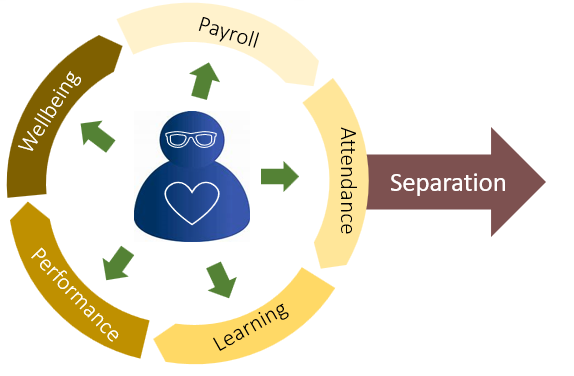

Part 2: Lifecycle experiences

The lifecycle of an employee provides us with many opportunities to collect diversity data. The HR processes themselves are generating data on an employee, so why not collect a little more... right? Well, not always. The perceived value of diversity data can blind us to the realities of governance, leaving organisations exposed to the risk of a misuse of data or a breach, even if only an internal one.

Sourcing diverse talent

Recruitment is increasingly becoming the preferred process for collecting diversity data. The benefits of this are appealing:

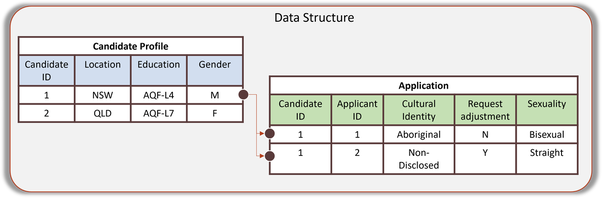

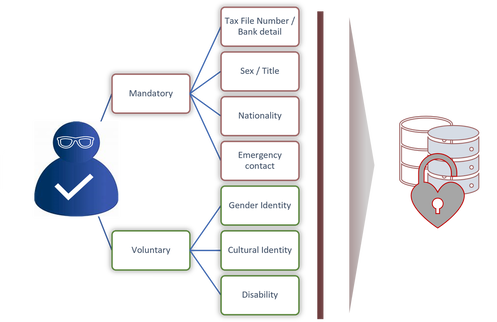

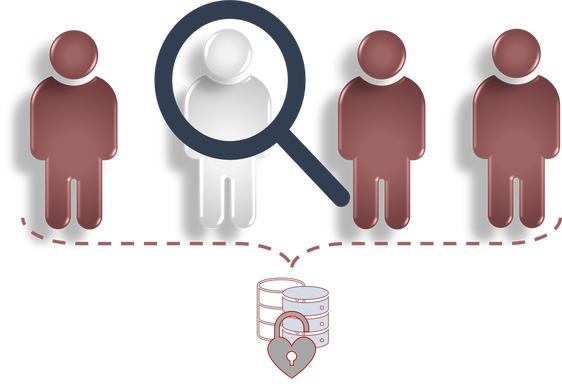

The default process for collecting applicant diversity data is within the application itself. There's probably a purpose statement along the lines of "commitment to equality" and a belief that storing the data in the system during this process will inform all other downstream processes. But the data itself is tainted... here's why:

My biggest observation here is that the process design and system capability for collecting the data are clouded by a perception of value. When a person clicks on an advert, they are requested to either log into an existing profile or create a new one from scratch before progressing with the application. Recruitment systems generally manage two broad processes: the first is the creation of a candidate profile (which is stored as one data table), and the second is the management of the application (which is stored as another data table). One candidate can have multiple applications, and more often than not diversity is asked within the application rather than as part of the candidate profile. This creates duplicate rows and removes a single source of truth.

My next observation is the purpose of collection and the blurring of a diversity "profile" with the inclusive adjustments required for an application. Most application forms that request D&I data do so with a statement that refers to the application and doesn't go as far as to state that the data is intended for use in downstream processes. Unless carefully stated, any diversity data collected for the purpose of an application fulfils its purpose once the application has closed. The data should then be destroyed or made inaccessible for other use.

As attractive as it is to collect diversity data through recruitment, if it's not done right, it can't be used.

Onboarding successful candidates

Onboarding is the process of converting a candidate into an employee and getting them set up for payroll. It is arguably the most crucial point of data collection.
HR systems are improving in their ability to connect recruitment to payroll, but even with interfaced systems there are requirements for data collection... so why not collect a little more... right?

My observation here is the expectation that people will provide the information purely because it's part of the onboarding process. The issue with this is that diversity information is not a requirement for onboarding. Yes, there are mandatory data elements that have a clear purpose of creating an employee payroll record. But this is not the same purpose as collecting diversity data for analysis, or monitoring equality in processes.

Diversity completion percentages are low when the data is collected at onboarding. I don't claim to know the reason for this, but I suspect individuals are a little fatigued by the amount of data they need to provide for onboarding, and deep down they know diversity information isn't required, so they don't feel compelled to provide it. They're even less likely to provide it if they already did so during the application process.

Managing and farewelling diverse talent

By this stage there really should be no further need for the collection of diversity data. But as we've discovered, response rates to diversity surveys are so low that organisations are faced with the constant challenge of maintaining diversity data and encouraging those who have not yet provided it to do so - but to do so only once for all systems!

Systems are improving, and more and more organisations are moving away from the "best of breed" platforms that are specific to one process, to the "end-to-end" platforms with data flowing from module to module. My historical observations here have been in organisations that haven't had integrated or interfaced systems and have therefore requested employees to complete yet another diversity profile for another process and another system. This once again creates multiple records for an employee, and we lose the source of truth.
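The loss of a single source of truth described above can be illustrated with a small sketch. It assumes diversity answers are stored once per application (or per system) rather than once per person; the field names, identifiers and answers below are hypothetical and not taken from any particular HR system.

```python
from collections import defaultdict

# Hypothetical rows: one per application, each carrying its own diversity answer.
applications = [
    {"candidate_id": "C001", "application_id": "A1", "disability": "No"},
    {"candidate_id": "C001", "application_id": "A2", "disability": "Yes"},
    {"candidate_id": "C002", "application_id": "A3", "disability": "Prefer not to say"},
    {"candidate_id": "C003", "application_id": "A4", "disability": "No"},
    {"candidate_id": "C003", "application_id": "A5", "disability": "No"},
]


def conflicting_candidates(rows, field):
    """Return candidates whose answers to `field` differ across their records."""
    answers = defaultdict(set)
    for row in rows:
        answers[row["candidate_id"]].add(row[field])
    # Keep only candidates with more than one distinct answer.
    return {cid: sorted(vals) for cid, vals in answers.items() if len(vals) > 1}


conflicts = conflicting_candidates(applications, "disability")
print(conflicts)
```

Candidate C001 has two applications with different answers, so an analyst has no way of knowing which record is the true one. Holding the answer once, on the person's profile, avoids the problem at the source.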
More recently I have observed organisations opting not to have diversity as part of a secondary system profile because it has been collected at core. Instead, they rely on analysts to join data from multiple systems to provide insights. Or those with more advanced interfaces between systems migrate the data from one platform to another.

The issue here comes back to purpose. An employee may be willing to provide diversity data at onboarding, or to update a profile after a campaign. But they might not be aware that a reporting analyst has access to their data or that it is indeed moving from one system to another, nor are they aware of how long the data will be held and used for analytics after they leave. Without consent, this is a misuse of data.

To sum up:

In part 3 I will draw on these lifecycle experiences and provide some recommendations as to how I think it can be done right... more to come!

When it comes to the collection and use of diversity data, every organisation I've worked with has done so to strive towards a diverse and inclusive workplace through tracking and analysing data. But even those with the purest of intentions must be questioning their approach amid these seemingly never-ending headlines of data breaches. What has struck me the most is the recurring theme of personal and sensitive data being stored beyond the purpose for which it was collected. And this, in my view, is the greatest hurdle for HR departments looking to collect diversity data, facing the questions:

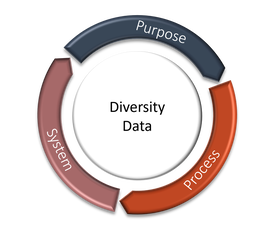

In this three-part blog I am going to explore this challenge and, drawing on my observations from 10 years of providing analysis of workforce diversity, see if I can come up with an approach that gets it right.

Part 1: The ring of governance

Before data is collected, HR departments need to consider governance. This isn't an easy task and is often not an area of expertise within HR. But data governance is a requirement in all aspects of people data. Diversity data is both personal and sensitive and should be classified as highly restricted in all HR departments. This classification requires that extra steps be taken to ensure its safety and reduce the risk and impact of a breach. These steps include:

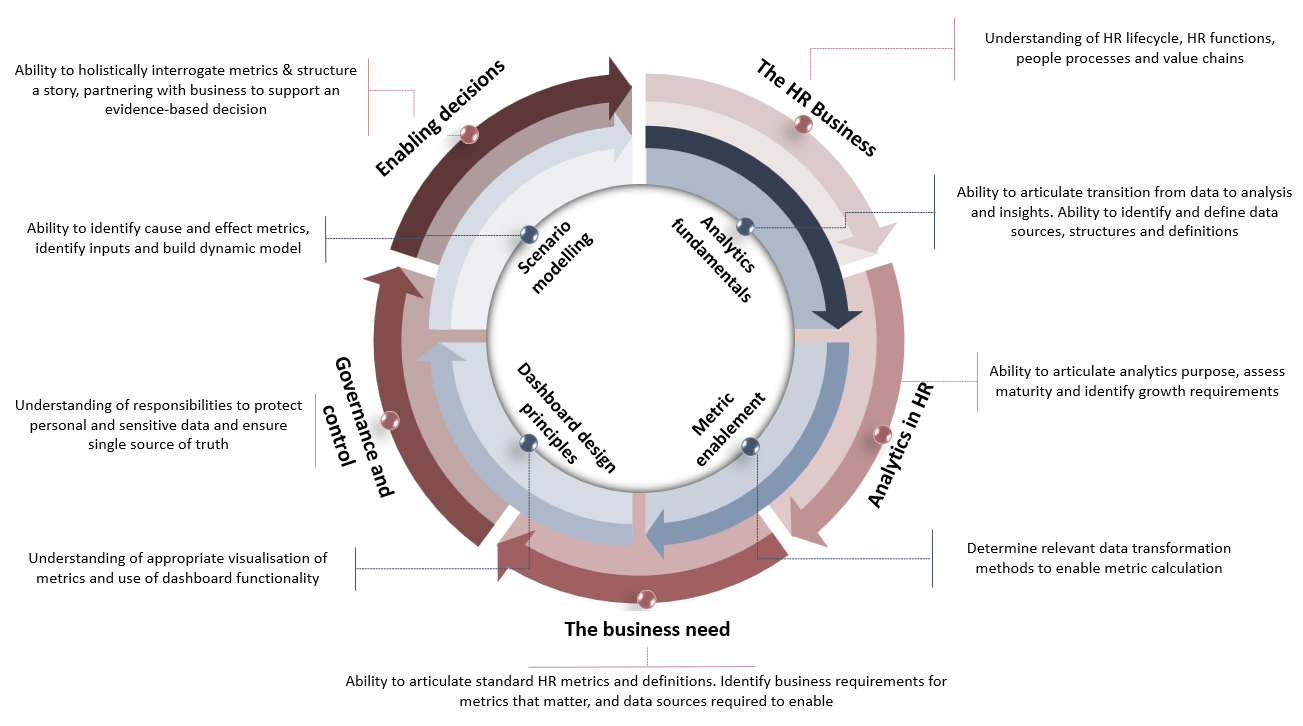

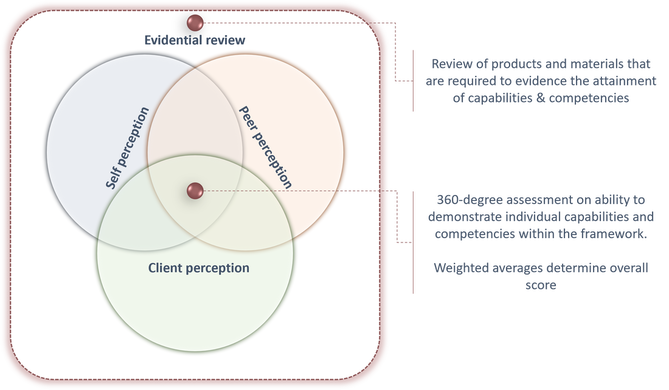

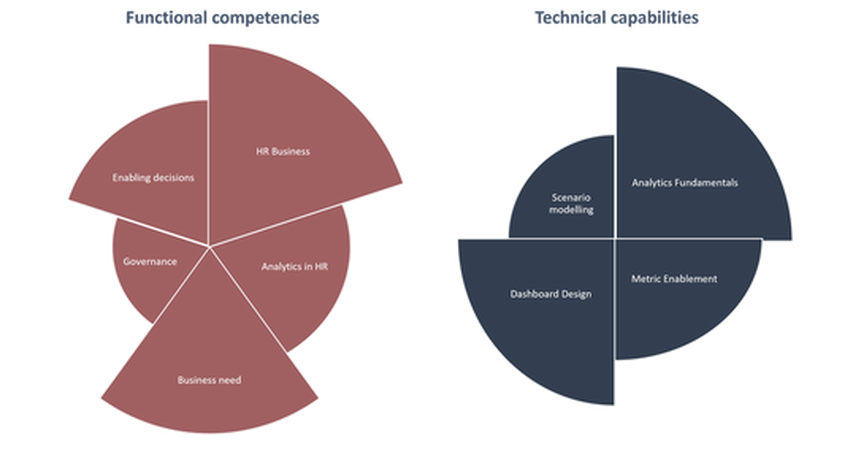

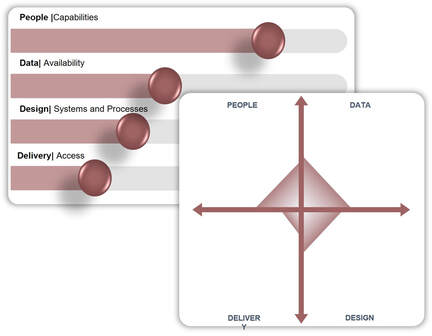

Defining the purpose for the data being collected.

This is about being transparent about the full extent to which the data is going to be used. A lot of organisations are good at declaring that data collected is confidential and won't be used to identify any individual. But they don't always extend the declaration to include that the data will be used for analysis and will therefore be accessible to reporting teams, or that it will be used to interface to other systems to create user profiles. The misuse of data, no matter how unintentional, is a breach. It's vital to make sure that the declaration of purpose includes all possible uses of the data for the duration of its lifecycle.

Identifying the process through which the data is collected.

This refers to the methodology of data collection as well as the HR lifecycle process that it relates to. The most common methodology I have observed is the use of a "form" or module within the HR payroll system which is completed during or after the onboarding process. Increasingly, organisations are trying to capture diversity data during the recruitment process and using this to pre-populate an employee profile. If this is done right, then great. If not, then it risks a breach. I'll explain more in part 2.

Building confidence in the security of the systems used to store the collected data.

HR systems are evolving at a rapid pace, with big players such as Oracle, Workday, SuccessFactors and Cornerstone all offering "end-to-end" HR systems. End-to-end simply means that all HR processes can be executed from within a single "front end" platform. In other words, HR operations or line managers can do everything in one place. The reality of these systems is that they are not truly end-to-end. Many are made up of modules that were legacy best of breed systems acquired by the big players over time. Whether you have an end-to-end HRIS or a collection of systems, data needs to flow between them.
The movement of data from one system to another can result in a breach. The potential for this movement needs to be understood, transparent and declared as part of the purpose of collection.

Quality of the resulting diversity data

The above governance considerations can have an impact on the structure and quality of the data produced, which in turn impacts the analytical value when trying to evidence a diverse and inclusive workplace. There are some things that an analyst needs in order to do their job well. These include being able to access the data in its raw form without fear of a breach; having the data structured and stored in such a way that there is a single source of truth, not conflicting data across multiple systems or modules; and having the ability to link this data to other system data through an identifiable field. All of these aspects need to be taken into consideration when collecting diversity data, no matter where in the lifecycle it is collected.

In part 2 I share some of the observations I've made over the years of analysing and providing insights on diversity data.

Throughout my career I've always strived towards improving my capabilities as a people (workforce) analyst. I've looked to many external capability frameworks or courses to help me identify areas of growth. What I always find are frameworks that focus on the technical capabilities of an analyst, pushing them from basic data management right through to predictive and prescriptive modelling, all under the banner of "Data Science". Those who know me know I hate that term. Of course there's science in data analytics, but there's also art. I've even spoken about that in my musings on dashboard design principles. A lot of that art needs to come from deeper competencies in the functions of HR. I've really struggled to find a framework that caters for both the science and art of being a people analyst. So... I've made my own!
A "People Analytics" capability framework

The framework consists not only of technical capabilities, but also the functional competencies required to become, in my view, an expert analyst in this field. I've often talked about the need to be able to overlay technical abilities with an understanding of HR. I credit my success more to my understanding of HR than to my technical abilities as an analyst. Yes, I can do some pretty cool things with data, but more importantly I can make it meaningful within the context of people processes and enabling workforce planning decisions.

The above illustrates the overarching framework, consisting of four technical capability themes and five functional competency themes. There are approximately 30 individual capabilities/competencies within the framework, which can flex or refine depending on a client's environment. I've also tried to illustrate the shift in importance or weighting of each of these themes. Early on in a people analyst's career their technical capabilities are more important than their functional competencies. But I believe this dial needs to shift as you grow in the field.

Holistic approach to assessment

The framework also includes detail of how capabilities can be evidenced through the materials and products created by the analysts. Similarly, a stakeholder assessment of their perception in delivering capabilities provides a holistic and quantifiable rating. For example:

Planning for growth

The assessment against these capabilities/competencies will identify areas of strength and opportunities for growth. This may include:

Now that I have developed a framework, I'm keen to test it! I'm searching for clients who are willing to adopt this capability framework within their people analytics roles and work with me on assessing the capabilities of their analytics function and developing a plan for growth.

What you will get:

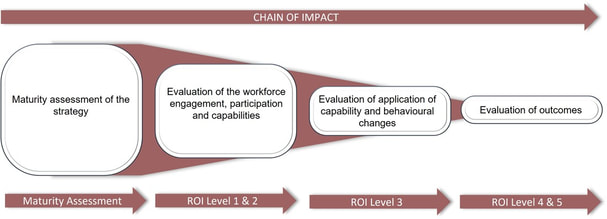

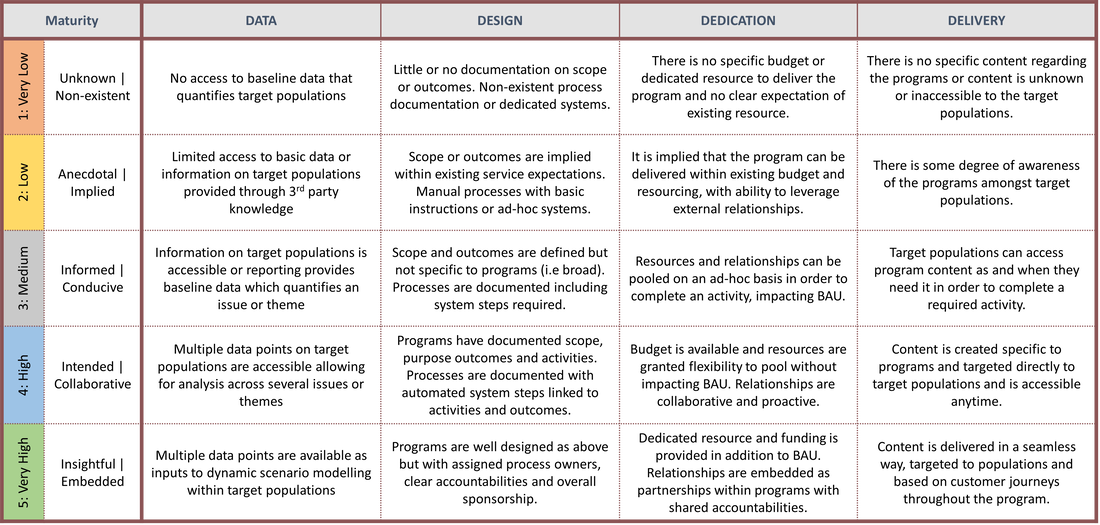

Please contact me if this is of interest to you.

Evaluation is a critical part of any workforce capability strategy / program / training course. It's a tool that's used not only to measure the effectiveness and impact that training can have on a workforce but can also influence and improve the design and delivery of the program itself. Many in the learning and development world want to use evaluation to evidence impact in broad workforce metrics like employee retention, leadership satisfaction or diversity representation. But the truth is no single program or even a collection of programs can credibly claim that they have influenced these outcomes based on the delivery of the programs alone - hence the need to evaluate.

Chain of impact

I've seen many organisations leap to measuring overall workforce outcomes as a way of evaluating their training programs and get disheartened by the reality of the dial not shifting. What is always missing is a credible chain of impact. Donald Kirkpatrick was onto something those many years ago when he introduced the Kirkpatrick model for evaluation. Jack Phillips took it to the next level (pun intended) with the Phillips ROI methodology, which I was proud to get certified in over a decade ago now. My evaluation framework is unapologetically built on these foundations but with my own added flair.

Maturity Assessment

My framework includes an assessment of maturity within the strategy or programs themselves, looking at four components:

An assessment of maturity in these components measures the strength of the foundations that the strategy or programs are built on and provides a pathway for growth. See my post on maturity here.

Performance and Perception

Most evaluations of learning programs are based on participant feedback alone. This is often captured as an after-event survey. Perception measures are of course critical to an evaluation: they measure experience and therefore the effectiveness of a program. But alone they are a little one-sided. The inclusion of performance metrics is another way to add credibility to an evaluation and to create a more tangible link to organisation / workforce outcomes. Performance metrics captured at all the ROI levels and measured in conjunction with the perception measures will provide a well-rounded, holistic evaluation of the impact of a program.

A full ROI evaluation isn't right or necessary for every training program. They can be time consuming and costly to implement, so organisations continue with their status quo of feedback forms and never truly know what impact their programs are having. And whilst a full ROI may not be necessary, there are a few steps a Learning and Development team can take to improve the credibility of their evaluations without breaking the bank or adding to their workload.

Step 1: Include level 1 performance data

Level 1 is all about reaction (which is a perception measure) and participation. Every organisation will in some way capture participation in training programs, either through a learning management system (LMS) or some bespoke register. Use this as a performance measure, but don't just measure the volume of participants or even the percentage of completions. Instead, measure participation as a percentage of a program's target population. For example, if a program is targeted towards female leaders, measure the volume of actual participants as a percentage of the total female leadership workforce.
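As a minimal sketch of the calculation in Step 1, participation can be expressed as a percentage of the target population. The function name and the headcounts below are hypothetical and used for illustration only.

```python
def reach(participants, target_population):
    """Share of the target population that actually took part, as a percentage."""
    if target_population <= 0:
        raise ValueError("target_population must be positive")
    return 100.0 * participants / target_population


# Hypothetical figures: 12 participants from a female leadership workforce of 240.
program_reach = reach(participants=12, target_population=240)
print(f"Reach: {program_reach:.1f}%")
```

In practice the numerator would come from the LMS or attendance register and the denominator from the HR system headcount for the targeted group.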
This is a measure of reach and shows how effective the program has been at engaging the target workforce. 100% of participants may have reacted well to the program, but if those participants only represent 5% of the target population, can the program really be expected to change a dial at level 4?

Step 2: Incorporate data collection in the delivery of the program

So many training programs still wait until the end of a training program, or even after it has been delivered, to capture participant feedback. It doesn't have to be this way! This method is guaranteed to yield low response rates, which reduces the credibility of your evaluation. Incorporating data collection in parallel with training delivery is a really effective way of capturing participant reaction and learning (ROI levels 1 & 2) during the delivery of the program. Personally, I am finding the use of MS Forms (not intended to be a plug) a really effective way to capture data during workshops or training sessions. Build forms that include survey questions and measure before and after levels of capability. They take 2 minutes to complete and can be timed with the delivery of learning outcomes. More importantly, you can see the analysis in real time and make any adjustments to your delivery on the go!

Step 3: Set an action plan and FOLLOW UP!!

I accept this requires capacity to do so. But if you ever want to achieve level 3 evaluation, it really is down to you to set the expectation. Many training courses end with the "and tell us how you are going to apply these skills" bit. But how often is this followed up on? Formalise this component of training into an actual performance/action plan where a participant sets out a goal to apply their new capabilities within a 6-month window. Follow up and ask whether or not the goal was met, what the effects were and whether there were any barriers.
You can then turn this into performance metrics with a % of participants who successfully applied capabilities in their day-to-day operations. Not only will this add credibility to your evaluation but it will also help you improve the content of the training itself.

Bonus step: Contact RMEASURES

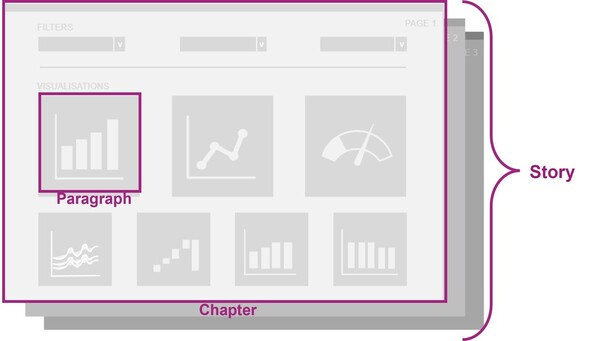

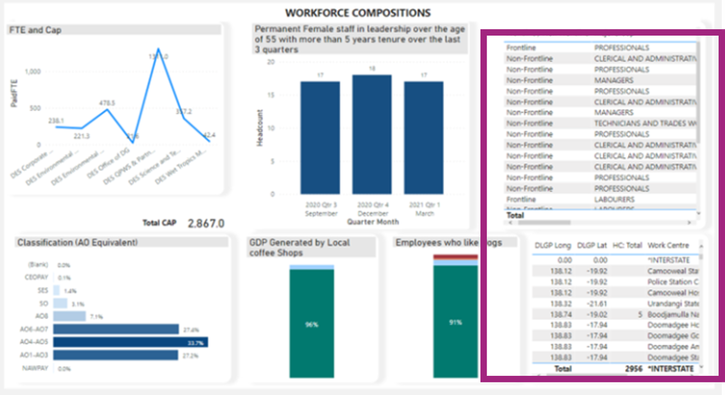

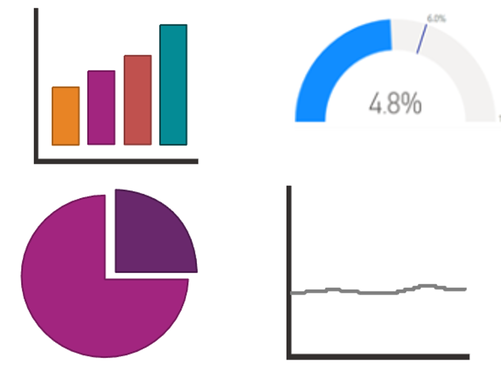

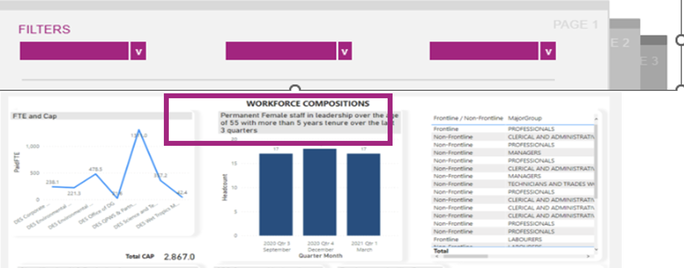

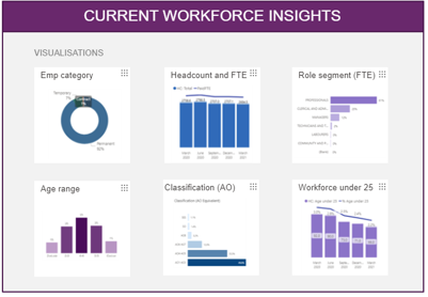

I am certified in ROI evaluation methodologies with 20 years' experience in HR strategy and program evaluation. I can help you analyse and evaluate the data already collected, combine it with performance metrics and recommend steps to take to improve your evaluation approach.

Dashboards are a collection of visuals that convey a coherent message. Many would say that the role of building a dashboard is the role of a data scientist. I would say it’s the role of a creator; after all, it is art sweety! But even the most abstract of artists will have some rules or principles to follow, otherwise the message or meaning they are trying to convey is lost. I feel the same about dashboards, so I thought I’d share some of my own rules:

1) Create for the Consumer

As data scientists we can be tempted to flex our analytical muscle and produce something that is ….. well….. scientific. But those who are consuming the analytics are much less likely to be data scientists; they wouldn’t need us if they were. Curb the enthusiasm and always keep the consumer in mind. Build a dashboard that talks to their understanding of business process and their expectations of metrics. Keep it simple and it will succeed.

2) It's just like reading a book

A story is a collection of chapters and paragraphs. If those paragraphs all talk about something different and the chapters don’t follow a certain order, then the story just isn’t a story. The same applies to dashboards. Let's assume a dashboard has a number of pages. Each page is a chapter of a book and needs to tell one part of the story. Within each page there are visual metrics in tiles. Each tile is a paragraph, which needs to be consistent in theme. Without this structure a consumer of a dashboard will not be able to easily understand what it is trying to say and won't be able to get any insights from it - which is its entire purpose.

3) No time for tables

Controversial I know, but if you want tables, open a spreadsheet.
The tiles on dashboards need to be able to communicate the metric at first glance. If a consumer needs to ask “what is this chart saying” then the tile has failed. Do not compensate for failure by including a table. Tables take time to read and digest and quite simply take up too much space. By all means enable drill to detail functionality or exporting of underlying data, but keep the tile visual. It's just more pleasing to the eye.

4) Pick the right visual

This may sound like a no brainer, but too often have I seen a chart misused, overcluttered, overcomplicated or just plain unbearable! Visuals are there to fulfil an analytical task such as comparing values, showing the percentage composition of a total, tracking a trend over time or understanding the relationship between value sets. The visuals that are made available to us lend themselves well to those tasks – so pick the right one, convey a simple message and for the love of analytics limit your use of pies!

5) Filters find the facts

It may seem I'm contradicting my first principle by not building a tile for a specific metric a consumer has requested. I'm a firm believer in building consumer capability and encouraging them to interact with the functionality of a dashboard to find the fact they are looking for. Filters are a great way of making sure a single dashboard can tell multiple variations of the same story – use them, make your consumers use them, and their capability will grow.

6) The rule of six

My brain just can't take much more than that, nor can the page. I do believe a chapter can be told in 6 paragraphs; there are after all only so many metrics you can squeeze out of a theme. Any more than that and you risk repeating yourself and discouraging the use of filters. This is also a real estate issue: if you start cluttering your page with more than 6 tiles you're going to lose the eye of the consumer, and ain't nobody got room for that.
These rules or principles are of course flexible; there's always room for artistic license for the purpose of storytelling. If you're on a journey of dashboard creation and want some help in making sure your dashboards work for your consumers, then please do reach out. I'm always happy to share opinions.

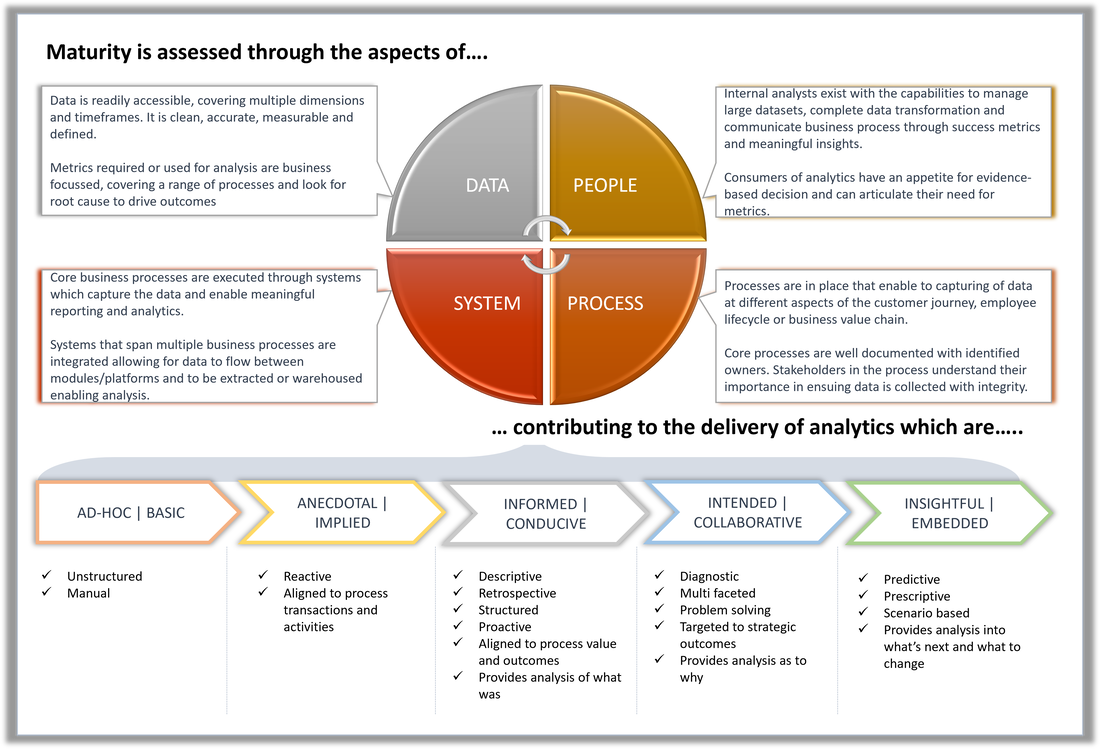

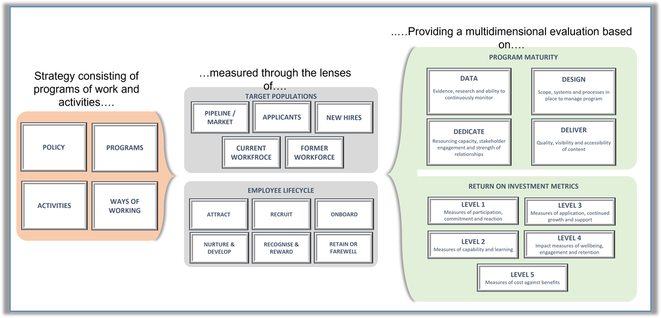

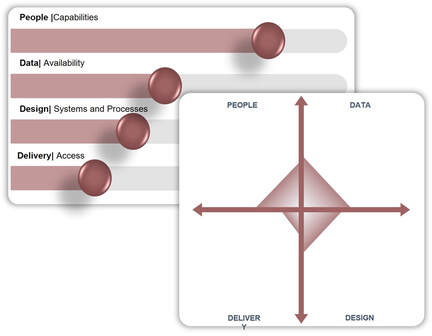

P.S. Did you notice how many rules I have?

I have spent much of my career in HR Analytics focusing on building organisational analytics capability and maturity growth. I have been influenced by the many maturity models that exist (along with some brilliant people I have worked with over the years) and have developed my own way of assessing and striving towards maturity. Whilst these models are great, they focus on the maturity (or rather the type) of the analytics delivered and not so much on what it takes for an organisation to be able to deliver analytics at any level of maturity.

In my view there are four key components to achieving analytics maturity within an organisation and all need investment for growth. The level of maturity in each component will then influence the type of analytics that can be produced. This is illustrated below.

Many organisations struggle to grasp that growth is required across all aspects simultaneously if they are to realise their dream of insightful or embedded analytics. To achieve that, significant investment is needed, and it is too often avoided through the hiring of a skilled analyst or data scientist who is expected to perform miracles.

Analysts are also often called upon to use analytics to help evidence or evaluate the success of workforce strategies. I certainly have been! And just like the above, I've developed models and frameworks to help me along the way. The term strategy is mostly an umbrella term for a collection of policies, programs, activities or ways of working. The evaluation model seeks to categorise these through the lenses of those it is aimed at (target populations) and the HR processes affected by it. This is, after all, the way we identify the participants and success measures for the strategy. Illustrated below.

Maturity features once again here, not in terms of analytics but in terms of the maturity of the programs being delivered.
In the earlier stages of my career, I would have simply identified which standard HR metrics are aligned to the HR lifecycle and said these were the measures of success. But that's just business as usual reporting. The focus needs to be not just on the desired outcomes of a strategy, but on its own level of maturity, as this is the ultimate leading measure of how effective a strategy will be. It also helps manage expectations of when a strategy is likely to achieve desired outcomes. If an organisation isn't mature enough in its data, design, dedication and delivery of the strategy, how can it be expected to succeed? I've since developed a maturity model for the assessment of strategies and built this into the evaluation framework above and detailed below.

Assessing maturity, whether it be analytics or workforce strategy, is critical for an organisation striving to achieve workforce / people outcomes. I've had conversations with clients who say "we don't need a toolkit to know we're not mature" and just continue throwing new "strategies" at the problem. A proper assessment of maturity will enable an organisation to identify where specific areas of growth are needed, where existing maturity can be leveraged or where investments are likely to yield a return. This action plan may well become a strategy of its own and may force an organisation to look within itself for improvements before expecting to see them in its originally intended outcomes. But every building, no matter how tall it wants to be, needs a solid foundation to grow from.


RMEASURES

Owner: Richard McMorn

Entity Type: Sole Trader

ABN: 28 825 984 791
